TL;DR: Project Valhalla is an effort make Java more efficient when dealing with primitive-like objects. While still being experimental, it significantly cuts down object allocations and achieves up to a 2x speed increase (on some platforms) in our example task. Yet, in its current state, Valhalla should be applied together with careful benchmarking because performance regressions are also possible. For more details, please read on.

Recently, I've discovered this guide which walks you through implementing a toy ray tracer from scratch. I took the implementation written in Clojure by Jeaye Wilkerson and wrote my own in Java to see if the two would have comparable performance. As I went through the task, it became clear that the bulk of computational work involves performing math operations with geometric vectors. Considering that the JVM allocates these vector objects on the heap and then furiously garbage-collects them, I couldn't help but wonder if this is an excellent opportunity to showcase the potential of Project Valhalla. Equipped with this insight and my regular performance tools, I conducted a couple experiments, and now I'm ready to share the results with you.

In the current version of Java, each object has an identity. Identity serves as an abstract theoretical concept that lets us tell apart two distinct objects without looking at their contents. This is really a single property that having an identity guarantees; everything else that comes with it is an implementation detail. But most of the time, we are more interested exactly in those implementation details and practical implications of having an identity, and those are:

==.System.identityHashCode().synchronized block.Feature 1 is attained by objects being reference types, each with its own pointer. If two pointers are equal, they point at the same object. Features 2-4 are fulfilled by each object having a header field.

Project Valhalla is a major effort to introduce value objects to Java. However, the term value is heavily overloaded and ambiguous. A more accurate name for these objects would be identityless, as the core objective is to eliminate their identity. For what purpose? Here are some specific optimizations you could do to such objects then:

Naturally, these changes may be beneficial for program performance due to better memory locality (3), improved cache utilization (1, 3), reduced strain on the garbage collector (2). Interestingly, none of the above optimizations are explicitly guaranteed by the spec. Valhalla allows developers to define value objects but then lets the virtual machine determine how each object will be presented in memory and which optimizations can be applied. This gives a lot of flexibility to the designers of the language and the VM implementers. For the users, however, it could feel clunky because there is no way to enforce or ascertain at compile time whether a particular object will be inlined, stripped of its header, or scalarized. Consequently, additional runtime introspection becomes necessary to validate our assumptions.

Project Valhalla has been in development for several years and is shipped piecewise. Some of its prerequisites have already landed into the JDK proper, but Valhalla itself is not in the upstream yet. Fortunately for us, early access builds are available for everyone to experiment with the new features, which is precisely what we are going to do.

First, we need to download an early access build from

here. It is a tar.gz archive; all you need

is to unpack it, set the full path as JAVA_HOME env variable, and add the

bin subdirectory to your PATH. The best way to do it depends on your

platform/distro. On MacOS, I move the extracted build to

/Library/Java/JavaVirtualMachines/ and execute the following command in the

shell to activate it:

$ export JAVA_HOME=$(/usr/libexec/java_home -v 20)

$ java -version

openjdk version "20-valhalla" 2023-03-21

OpenJDK Runtime Environment (build 20-valhalla+1-75)

OpenJDK 64-Bit Server VM (build 20-valhalla+1-75, mixed mode, sharing)

Sweet, with the Valhalla-enabled JDK installed, we are ready to proceed.

You can find the source code for my ray tracer implementation here. It uses POJOs to represent all entities in the program, namely:

You can download the project and then run the ray tracer like this:

$ ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 13,711 ms

Allocated: 3,388 MB

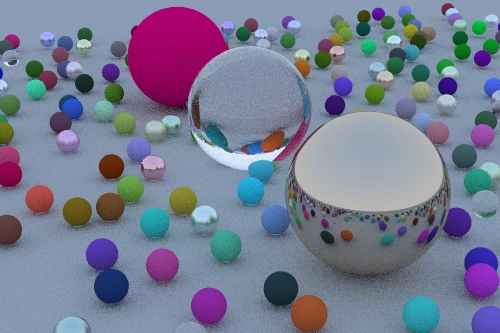

On my Macbook Pro M1 2020, it takes ~13.7 seconds to produce this image with the supplied arguments, and the program allocates 3.4 Gb worth of objects[2]. Let's figure out what kind of workload dominates in the tracer. I'm going to use clj-async-profiler; for any non-Clojurists reading this, you'll want to use async-profiler directly. In my case, I start a REPL with the Java classes already compiled and do this:

user=> (require '[clj-async-profiler.core :as prof])

user=> (prof/profile (raytracer.Render/render 500 20 "out.ong"))

The profiler tells us, without much surprise, that the biggest contributor to execution time is calculating intersections between rays and spheres. This portion of the code heavily relies on performing mathematical operations such as vector dot products, subtractions, and other mathematical computations. Let's see what types of objects are being allocated and where.

user=> (prof/profile {:event :alloc} (raytracer.Render/render 500 20 "out.ong"))

Click "Reversed" and "Apply" to discover that Vec3 objects account for 77% of all allocations. Again, not surprising so far. It is time to put Valhalla into action.

In theory, it should be enough to mark the class with a new special keyword

value to start reaping the benefits. Importantly, you must not use the

identity of objects of that class anywhere (i.e., synchronize on them or use

them as keys in IdentityHashTable). Let's start small and make Vec3 a value

object since it is the primary cause of allocations. Here's what its definition

should look like:

public value class Vec3 {

$ ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 15,415 ms

Allocated: 1,965 MB

Ain't that weird. We shaved off 1.5 Gb of allocations, but the elapsed time went up instead? At least, let's analyze where the allocations disappeared. To achieve that, I profiled the allocations for this version and then generated a diffgraph between the two flamegraphs; here it is:

Frames colored in blue represent the code where fewer allocations happen after

our change; red frames stand for code with increased allocations. Intuitively,

there shouldn't be any of the latter, so I attribute the red parts of the

diffgraph to either profiler inaccuracies or JVM migrating some allocations to

different code paths. We can observe significant skid — for example, you may

find Vec allocations within RandomGenerator methods (which couldn't be there).

But you can still click around, look at the Reversed view, and see the overall

55% reduction of Vec3 allocations which is equal to -42% of total allocations.

But the JVM could not or did not consider it necessary to remove all heap

allocations of it.

Perhaps, by only making Vec3 a value object, we are not utilizing the Valhalla

potential to its fullest. Considering Vec3's presence inside most other

classes, marking those as value classes may enhance data inlining and deliver

the performance boost we crave. Here are the modified lines (compared to the

initial version):

src/raytracer/HitRecord.java:3: public value class HitRecord {

src/raytracer/Scatter.java:3: public value class Scatter {

src/raytracer/Sphere.java:3: public value class Sphere {

src/raytracer/Ray.java:3: public value class Ray {

src/raytracer/Material.java:7: public static value class Lambertian implements Material {

src/raytracer/Material.java:24: public static value class Metal implements Material {

src/raytracer/Material.java:46: public static value class Dielectric implements Material {

src/raytracer/Vec3.java:3: public value class Vec3 {

By rerunning it, we get:

$ ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 15,086 ms

Allocated: 1,308 MB

Despite reducing the allocations further, the elapsed time is still higher than in the POJO version. The allocation diffgraph between the initial version and the all-value one is, as expected, predominantly blue. However, the CPU flamegraph reveals something new.

A few methods began to call an internal JVM function

BarrierSetNMethod::nmethod_stub_entry_barrier. Judging from its name, I assume

it is a memory barrier generated by JVM in places where the program invokes

native methods. Since we don't call any native code ourselves, it must be that

the JVM started generating these barriers after we've replaced plain objects

with value objects. The total runtime contribution of barriers is 30% (you can

write Barrier into the highlight field and press Highlight), and it is unclear

whether this behavior is unique to ARM and/or MacOS. In our specific example, it

appears that these barriers negate the performance benefits gained from using

value classes.

Value objects are not the only new concept introduced by Project Valhalla. At

some point, Valhalla designers decided to split their initial idea into two

distinct incarnations — value objects and primitive objects. While they both

lack the property of identity, there are differences between them — primitive

objects cannot be null or contain non-primitive fields. If you can satisfy

both requirements, then making the class a primitive instead of value can

(theoretically) bring an even greater performance uplift.

Let's do it gradually again by starting with our most active class, Vec3. The

updated definition should say:

public primitive class Vec3 {

$ ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 12,617 ms

Allocated: 224 MB

We're finally getting somewhere! It's encouraging to see that the runtime is at least on par with, or slightly better than, the POJO variant, and the allocations are nearly all gone. Let's keep going and transform everything into primitives:

src/raytracer/HitRecord.java:3: public primitive class HitRecord {

src/raytracer/Scatter.java:3: public primitive class Scatter {

src/raytracer/Sphere.java:3: public primitive class Sphere {

src/raytracer/Ray.java:3: public primitive class Ray {

src/raytracer/Material.java:7: public static primitive class Lambertian implements Material {

src/raytracer/Material.java:24: public static primitive class Metal implements Material {

src/raytracer/Material.java:46: public static primitive class Dielectric implements Material {

src/raytracer/Vec3.java:3: public primitive class Vec3 {

$ ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 12,285 ms

Allocated: 275 MB

Confusingly enough, allocations have slightly increased, and the runtime got a

bit lower. But overall, there's little effect from transitioning the remaining

classes to primitives. Taking a CPU profile of the all-primitive yields a

picture similar to the all-value version — memory barriers are still present in

the profile, and they seem to cause the slowdown. Here's a CPU diffgraph between

all-value and all-primitive builds; see that the biggest difference is the

vanishing of Vec3.<vnew> (a new special method akin to <init> but for value

classes) from the Sphere.hit stacktrace. Making Vec3 a primitive might have

enabled its scalarization instead of creating a full object; but I'm speculating

here.

To be honest, I was a bit disappointed by these results. Not disappointed with Valhalla, of course, but rather with my own high hopes of significant improvement in this task. I know that Project Valhalla is still in flux, implementation and optimizations will change many times until the release, and plenty of confounding variables are at play, such as CPU architecture, platform, and my poor understanding of the topic. And yet, my enthusiasm dwindled, the sky turned grey, and I became sad. Was writing this article a pure waste of effort?

One thing that still bothered me was that I had no access to CPU performance counters during this test. M1 provides some counters, and there is a way to track them, but nothing is readily available for Java yet. And I don't have another physical computer beyond my laptop, just unsuitable consumer devices — a home server built on ARM SBC, an ARM phone, an ARM tablet, a Steam Deck...

A Steam Deck! A portable computer that's based on an amd64 CPU and runs a fully-featured Arch Linux derivative. You can SSH onto it, install packages, and launch any software, just like on a regular computer. Is there a reason not to repeat the benchmarks on it? I couldn't find any.

| Plain objects | Value objects | Primitive objects | |

|---|---|---|---|

| Execution time | 22.7 sec | 48.4 sec | 12.0 sec |

| Allocated heap | 3.4 GB | 1.7 GB | 1.4 GB |

The first thing to notice is that the value class version has a staggering 2x runtime degradation compared to plain classes, despite the shrinking allocations. This performance degradation also manifested itself on M1, albeit to a lesser extent. Nonetheless, we have finally witnessed the long-awaited improvement — the all-primitives version has beaten the POJO version in speed by a remarkable 1.9x! What's even more fascinating is that it surpassed the best M1 result. Notably, it was achieved while still allocating 1.4 GB worth of objects, compared to ~200 MB on M1. This underscores how differently Valhalla might behave depending on the platform it runs on.

I tried profiling all three versions on the Steam Deck with clj-async-profiler, but the results showed nothing of interest. This may be attributed to the absence of debug symbols for this early access build of the JDK. Instead, here is me running the POJO version through perf:

$ perf stat -e cycles,instructions,L1-dcache-load-misses,L1-dcache-loads,L1-icache-load-misses\

,L1-icache-loads,branch-misses,branches,dTLB-load-misses,dTLB-loads,iTLB-load-misses,iTLB-loads\

,stalled-cycles-backend,stalled-cycles-frontend -- ./run.sh 500 20 out.png

render: imageWidth=500 samplesPerPixel=20 file=out.png

Elapsed: 22,992 ms

Allocated: 3,397 MB

Performance counter stats for './run.sh 500 20 out.png':

90,554,326,338 cycles (35.89%)

281,979,878,536 instructions # 3.11 insn per cycle

# 0.18 stalled cycles per insn (35.90%)

10,244,854,583 L1-dcache-load-misses # 7.06% of all L1-dcache accesses (35.83%)

145,092,667,792 L1-dcache-loads (35.73%)

36,231,776 L1-icache-load-misses # 1.75% of all L1-icache accesses (35.91%)

2,075,693,944 L1-icache-loads (35.91%)

103,496,590 branch-misses # 0.89% of all branches (35.97%)

11,573,112,791 branches (36.01%)

6,632,725 dTLB-load-misses # 9.70% of all dTLB cache accesses (36.11%)

68,374,293 dTLB-loads (36.08%)

2,316,490 iTLB-load-misses # 12.57% of all iTLB cache accesses (36.06%)

18,435,572 iTLB-loads (36.01%)

51,576,704,766 stalled-cycles-backend # 56.96% backend cycles idle (36.01%)

1,194,470,957 stalled-cycles-frontend # 1.32% frontend cycles idle (35.87%)

When specifying the performance counters of interest using the -e argument,

it's important to note that a CPU has limited hardware registers available for

tracking these counters. So, when requesting more PMCs than available registers,

perf employs a technique called multiplexing. It switches between tracking

different events and, in the end, interpolates the results. This is fine for us

because the workload of our benchmark is uniform, allowing us to gather the

overall picture from partial results. The percentages within parentheses in perf

output indicate how long the event has been actually tracked.

This is my naïve interpretation of the provided perf output:

I then ran the remaining two versions with perf, and here's the comparison table:

| PMC | POJO | Value | Primitive |

|---|---|---|---|

| cycles | 90,554M | 179,880M | 50,008M |

| instructions | 281,980M | 434,353M | 162,894M |

| IPC | 3.11 | 2.41 | 3.26 |

| L1-dcache-load-misses | 10,245M | 9,774M | 1,821M |

| L1-dcache-loads | 145,093M | 303,624M | 30,032M |

| L1-dcache-miss% | 7.06% | 3.22% | 6.06% |

| L1-icache-load-misses | 36.2M | 38.0M | 36.4M |

| L1-icache-loads | 2,076M | 2,350M | 1,922M |

| L1-icache-miss% | 1.75% | 1.62% | 1.89% |

| branch-misses | 103.5M | 104.3M | 103.1M |

| branches | 11,573M | 12,211M | 11,298M |

| branch-miss% | 0.89% | 0.85% | 0.91% |

| dTLB-load-misses | 6.6M | 5.8M | 5.5M |

| dTLB-loads | 68.4M | 66.2M | 63.6M |

| dTLB-miss% | 9.70% | 8.80% | 8.59% |

| iTLB-load-misses | 2.3M | 2.1M | 2.0M |

| iTLB-loads | 18.4M | 20.0M | 20.3M |

| iTLB-miss% | 12.57% | 10.70% | 9.86% |

| stalled-cycles-backend% | 56.96% | 69.91% | 41.97% |

| stalled-cycles-frontend% | 1.32% | 0.69% | 2.13% |

Let's start with the numbers for the value class version. Somehow, it had to retire 54% more instructions than the POJO version and with a worse IPC of 2.41. It exhibited twice as many L1d reads (basically, memory reads) despite maintaining the same number of misses. L1i access, TLB access, and branch prediction stats don't differ a lot from POJO. But the higher stalled backend cycles percentage (69.91%) makes me think it's not solely the shortage of FPUs causing the bottleneck, but perhaps also AGUs (cue the increased memory reads).

Now I'll look at the top dog — the primitives version. By some miracle, its number of instructions is 45% lower than in POJO and with the same stellar IPC. The amount of L1d accesses is a whopping 4.8x lower, and the number of misses is 5.6x lower. This suggests that Valhalla managed to successfully scalarize a whole lot of operations and keep the data in the registers without roundtripping through memory. L1i access, TLB access, and branching remain the same or marginally better than in POJO. Finally, stalled backend cycles are significantly lower at 41.97%, reaffirming my latest hypothesis that it's the memory address generation that's causing the stalls, not just the floating point math.

The benchmark results on M1 demonstrated that I unfairly expected more swing from Valhalla than this task had space for. For instance, this ray tracer implementation doesn't rely on many arrays of composite objects where structure inlining would help the performance. Most of the data structures used are ephemeral and processed at the very place they are created. Sure, you have to allocate them on the heap, but heap allocations are actually incredibly cheap in Java. And the impact of GC turned out to be minimal for this workload.

The Steam Deck results, on the other hand, proved that Valhalla has plenty of potential. Making an already efficient program run twice faster by simply adding a few keywords is a big deal. This, to me, is the strongest part of Project Valhalla — it offers a powerful facility available on demand and a la carte. It costs almost nothing to try out Valhalla for your program (once it hits the JDK upstream), and if it does work, then — hey — why say no to free performance?

That being said, if Valhalla ships in its present state, with the current means of introspection and awareness (which is to say — missing), it may become an advanced tool for highly specialized cases[3]. What I've experienced so far is that the approach to applying Valhalla optimizations is very stochastic; their effects are unpredictable and require empiric evaluation. While it's feasible in a toy project like this, it could become overwhelming in a large real-world application to go through the possible combinations of value and primitive classes and guess how the JVM would behave. I hope that over time, we'll get better instruments to illuminate what's going on with the data objects under the covers of the JVM.

This concludes my exploration of Project Valhalla for now. I'm excited about the direction Java is heading, grateful for the careful evolution approach taken by Java's designers, and happy that I did this experiment and learned more about these cool new developments. I hope you are, too. Give Valhalla a try, and thank you for reading!